In an increasingly digital and interconnected world, understanding human emotions has become a critical aspect of various industries. Emotions significantly impact our decision-making processes, behavior, and overall experience. Marketing departments are particularly interested in leveraging emotion detection technology to gain insights into consumer preferences, optimize advertising strategies, and enhance user experiences. This project report focuses on the development and implementation of an emotion detection system capable of analyzing images to identify the underlying emotions expressed by individuals.

Conception

To solve this task, I decided to train a convolutional neural network (CNN) on a public dataset of face images labeled with seven different emotions. To arrive at a viable solution, I employ transfer learning. The aim is a classification accuracy comparable to the state of the art for emotion recognition.

Basic Frameworks

The primary frameworks for implementing the emotion recognition solution will be TensorFlow and OpenCV. TensorFlow is an open-source library that makes it easy to develop, train, and test neural networks. The name TensorFlow refers to the multidimensional arrays (tensors) that flow through the layers of a network. These layers can be selected individually to build up a neural network architecture. The library also ships several pre-trained models that can be used for transfer learning. The second library, OpenCV, is a computer vision library whose algorithms will be used to extract faces and manipulate images for the inference step.

Transfer Learning and Model Choice

Transfer learning reuses an existing model to solve a problem that is different from, but related to, the one the model was originally built for. The model’s pre-trained weights are kept, and only its architecture is slightly altered. This saves training time, generally improves performance, and reduces the amount of training data required. The emotion classifier in this project is based on VGG19, a deep CNN made up of many stacked layers that ends in a dense output layer with 1000 neurons, because VGG19 was trained to classify images into 1000 categories. To adapt the model to emotion detection, the original output layers, including the 1000-neuron dense layer, are replaced with new output layers sized to the number of emotions we want to identify. Additionally, we freeze the original VGG19 weights and train only the new layers on the emotion dataset.
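The swap described above can be sketched with the Keras API in TensorFlow. This is a minimal illustration, not the exact architecture of this project: the 48x48 RGB input size, the 256-unit hidden layer, the dropout rate, and the optimizer settings are all assumptions, and `build_emotion_classifier` is a hypothetical helper name.

```python
import tensorflow as tf

NUM_EMOTIONS = 7  # seven emotion categories, as described in this report


def build_emotion_classifier(input_shape=(48, 48, 3), weights="imagenet"):
    """Frozen VGG19 convolutional base with a new, trainable output head."""
    # include_top=False drops the original dense layers, including the
    # 1000-neuron ImageNet output layer.
    base = tf.keras.applications.VGG19(
        include_top=False, weights=weights, input_shape=input_shape
    )
    base.trainable = False  # lock the pre-trained weights

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)  # assumed head size
    x = tf.keras.layers.Dropout(0.5)(x)                   # assumed dropout rate
    outputs = tf.keras.layers.Dense(NUM_EMOTIONS, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(1e-3),  # assumed learning rate
        loss="sparse_categorical_crossentropy",    # expects integer labels
        metrics=["accuracy"],
    )
    return model
```

Calling `build_emotion_classifier()` downloads the ImageNet weights on first use; passing `weights=None` builds the same architecture with random weights, which is handy for a quick shape check. Only the two new dense layers are trainable here.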

Dataset Selection and Issues

To train the model for emotion recognition, the FER2013 dataset, which consists of around 30000 images of facial expressions, will be used. Each image is labeled with one of seven emotion categories. The dataset also includes around 3500 test images for validating the trained model. Although the dataset offers a reasonable number of training images, some categories are over-represented and others under-represented. This class imbalance introduces bias: the Happy category, for example, contains around 7000 images, while the Disgust category has only around 400. To combat this issue, the data will be augmented, for example by flipping, rotating, or cropping images. Here is the link to the dataset.
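As a rough sketch of the augmentation idea, the following uses Keras preprocessing layers for flipping, rotating, and cropping. The transformation ranges, the 48x48 image size, and the strategy of topping every class up to the size of the largest one are assumptions made for illustration; `extra_samples_needed` and `augment_batch` are hypothetical helpers.

```python
import tensorflow as tf

IMG_SIZE = 48  # assumed image size

# Random transformations applied on the fly; all ranges are assumed values.
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),    # mirror faces left/right
    tf.keras.layers.RandomRotation(0.05),        # up to about +/-18 degrees
    tf.keras.layers.RandomCrop(42, 42),          # random 42x42 window
    tf.keras.layers.Resizing(IMG_SIZE, IMG_SIZE),  # back to the original size
])


def augment_batch(images):
    """Return a randomly transformed copy of a batch of images."""
    return augment(images, training=True)


def extra_samples_needed(class_counts):
    """Augmented images each class needs to match the largest class."""
    target = max(class_counts.values())
    return {name: target - count for name, count in class_counts.items()}
```

With the approximate counts mentioned above, `extra_samples_needed({"Happy": 7000, "Disgust": 400})` shows that Disgust would need about 6600 augmented images to match Happy, while Happy needs none.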

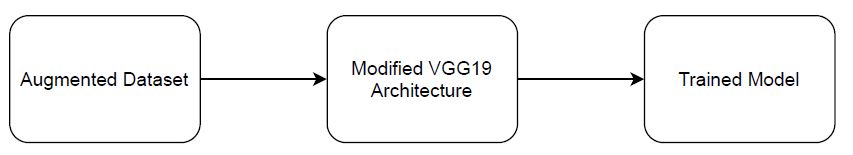

Model Training and Inference

The overall process of implementing the emotion recognition solution can be split into two distinct parts: model creation and inference. Model creation consists of augmenting the dataset, setting up the training environment and architecture with TensorFlow, and then running the training process.

The second part, which is the actual solution for making predictions, loads the trained model into a Jupyter Notebook for inference. Inference consists of loading an image into the notebook, extracting the face with OpenCV, preprocessing the face image, and passing it to the trained model for prediction. The prediction is visualized as the input image with the classified emotion written on it.

Development

After creating the concept, the next step was to start the implementation. This PDF explains the development process in detail.

Conclusion

Looking back on the creation of the emotion classifier, I can say that this project was a very pleasant and rewarding learning experience in computer vision and the development workflow of deep learning models. Given the issues with data quality, bias-variance problems during training, hardware limitations, and the trial and error of hyperparameter tuning, the task also proved more difficult than I initially thought. As the saying goes, the devil is in the details, and I now understand why many machine learning practitioners consider training deep learning models a mixture of art and science.

Personally, I am satisfied with the final result, although I had hoped for somewhat higher accuracy and more emotion categories. To improve upon this work, a larger dataset such as AffectNet, which has around one million images, could be used to train a model on more powerful hardware such as TPUs. A grid search over hyperparameters and model structure could also improve overall performance. The code for this project can be found in this repository.
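To illustrate the grid-search idea, here is a minimal, framework-independent sketch. The `evaluate` callback is hypothetical: in practice it would train a model with the given parameters and return its validation accuracy. The parameter names in the usage note are likewise only examples.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every parameter combination and return the best one.

    param_grid maps parameter names to lists of candidate values.
    evaluate(params) must return a score where higher is better.
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

A call such as `grid_search({"learning_rate": [1e-2, 1e-3], "dense_units": [128, 256]}, evaluate)` would run four training jobs. Since the cost grows multiplicatively with every added parameter, the grid has to stay small on limited hardware.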
